
Overview

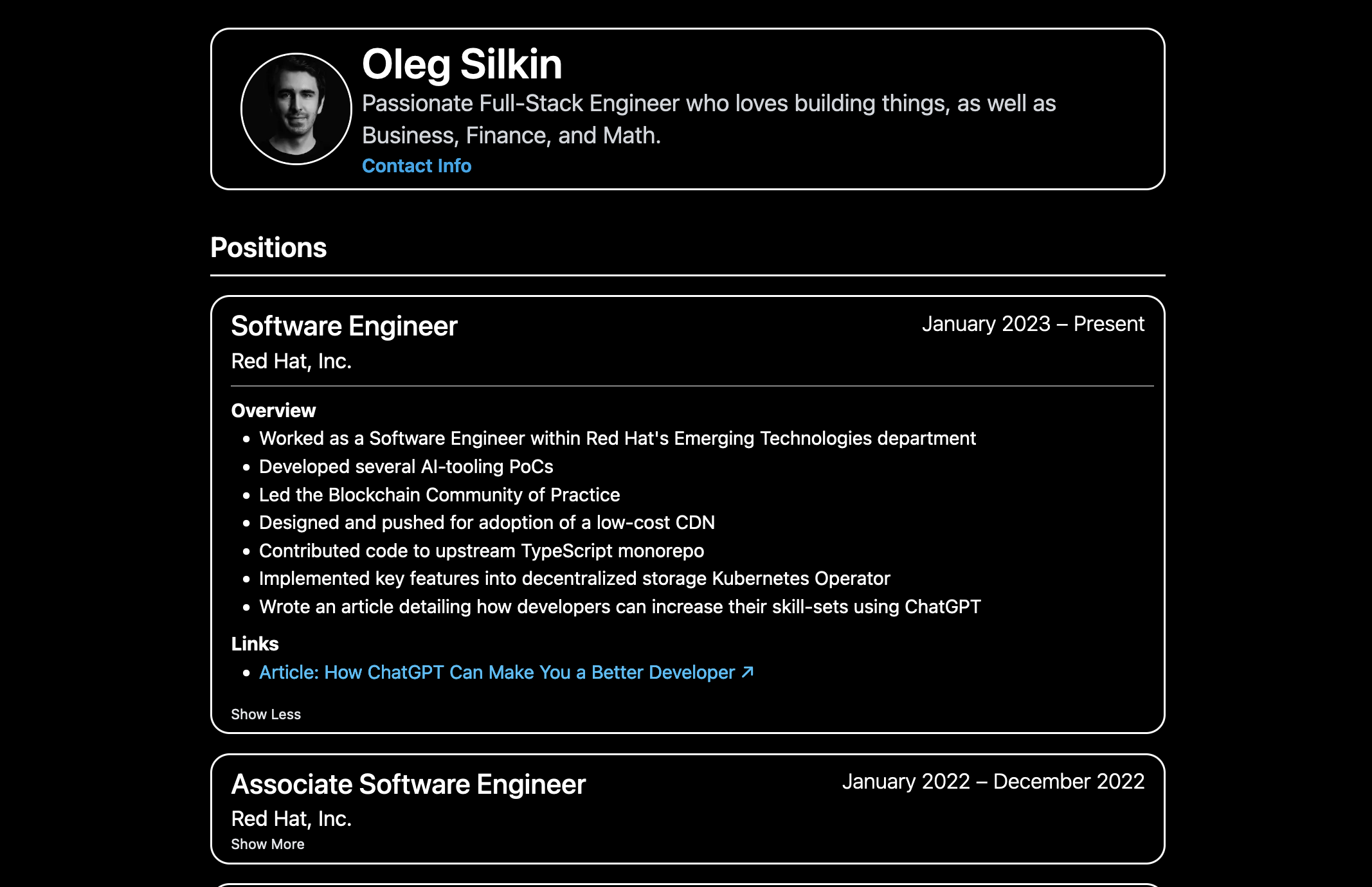

Plicanta is designed to streamline resume management and the job application process. It allows users to input their resume data and displays it publicly in an easily navigable format. When applying for a job, users can create an entry for the new position and add relevant information such as the job description, company, and position. Plicanta then generates a customizable boilerplate cover letter and lets users select the resume data points that are relevant to the application. Both the cover letter and the tailored resume can be exported as .docx files.

How It’s Made

Plicanta is built using Next.js, tRPC, TailwindCSS, Auth.js, OpenAI, S3 (DigitalOcean Spaces), Prisma, and serverless MongoDB. The monorepo structure, achieved using Vercel’s Turborepo, enables code reuse and functional separation into distinct packages.

What I’ve Learned

S3 Presigned URLs

My favorite part of this project by far has been learning how file uploads should work when a server handles user data. Rather than taking the naive approach of having the server accept the file upload itself, the best practice is for the client to ask the server for a presigned URL for a specific object. Once the client has this URL, it uploads the file directly to S3 and then sends the resulting object URL back to the server.

This is a much safer approach, since the file never passes through the server: the server does not have to hold it in memory or write it to disk. The presigned URL is also limited to a single object and expires after a set time. This is especially important when you’re dealing with large files, since it can be very easy to run out of memory or disk space.
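The three steps described above can be sketched from the client's side roughly as follows. This is a minimal sketch under stated assumptions, not Plicanta's actual code: the endpoint paths `/api/uploads/presign` and `/api/uploads/complete`, the `PresignResponse` shape, and the `uploadViaPresignedUrl` helper are all hypothetical names chosen for illustration. The fetch function is passed in as a parameter so the flow can be exercised without a network.

```typescript
// Hypothetical response shape from the app's own presign endpoint (an assumption).
interface PresignResponse {
  uploadUrl: string; // short-lived presigned PUT URL for one object
  objectUrl: string; // where the object will live once uploaded
}

// Minimal subset of the Fetch API used here, so a fake can be injected in tests.
type FetchLike = (
  url: string,
  init?: {
    method?: string;
    headers?: Record<string, string>;
    body?: string | Uint8Array;
  }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

interface UploadFile {
  name: string;
  type: string;
  data: Uint8Array;
}

async function uploadViaPresignedUrl(
  file: UploadFile,
  fetchFn: FetchLike
): Promise<string> {
  // 1. Ask the server for a presigned URL scoped to this one object.
  const presignRes = await fetchFn("/api/uploads/presign", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ fileName: file.name, contentType: file.type }),
  });
  if (!presignRes.ok) throw new Error(`Presign failed: ${presignRes.status}`);
  const { uploadUrl, objectUrl } = (await presignRes.json()) as PresignResponse;

  // 2. Upload the bytes directly to S3; they never pass through the app server.
  const putRes = await fetchFn(uploadUrl, {
    method: "PUT",
    headers: { "Content-Type": file.type },
    body: file.data,
  });
  if (!putRes.ok) throw new Error(`Upload failed: ${putRes.status}`);

  // 3. Report the finished object URL back to the server.
  const confirmRes = await fetchFn("/api/uploads/complete", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ objectUrl }),
  });
  if (!confirmRes.ok) throw new Error(`Confirm failed: ${confirmRes.status}`);

  return objectUrl;
}
```

On the server side, the presign endpoint would typically validate the requested file name and content type before signing anything, so that the client can only write to the object it was granted.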

Monorepo and Turborepo

One of the cool things that I’ve learned to do on this project is scale a codebase out as a monorepo. Vercel’s Turborepo has been hugely helpful because it supports remote caching: build outputs are uploaded to a remote host, so that other build jobs can pull from the cache and skip straight to the cached output. This is super useful because deploying to production triggers frequent rebuilds, so being able to reuse cached build output is a huge time saver.
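The idea behind this kind of caching can be illustrated with a toy model: fingerprint a task's inputs, and reuse the stored output whenever that fingerprint has been seen before. This is only a conceptual sketch, not how Turborepo is implemented. An in-memory `Map` stands in for the remote host, and `fingerprint`, `BuildCache`, and the choice of the FNV-1a hash are illustrative assumptions.

```typescript
type Sources = Record<string, string>; // file path -> file contents
type BuildFn = (sources: Sources) => string;

// FNV-1a: a small non-cryptographic hash, enough to fingerprint inputs in a sketch.
function fingerprint(sources: Sources): string {
  // Sort the paths so the fingerprint does not depend on key order.
  const text = Object.keys(sources)
    .sort()
    .map((path) => `${path}=${sources[path]}`)
    .join("\n");
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16);
}

class BuildCache {
  // Stand-in for the remote cache host.
  private store = new Map<string, string>();
  hits = 0;

  run(sources: Sources, build: BuildFn): string {
    const key = fingerprint(sources);
    const cached = this.store.get(key);
    if (cached !== undefined) {
      // Same inputs as an earlier run: skip the build and reuse its output.
      this.hits++;
      return cached;
    }
    const output = build(sources);
    this.store.set(key, output);
    return output;
  }
}
```

Running the same inputs through `BuildCache.run` twice invokes the build function only once; changing any file's contents changes the fingerprint and forces a fresh build.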

Hook Management in React

Writing maintainable React code means extracting stateful logic into smaller custom hooks that can build on each other. This has helped me tremendously, since my code has gone from looking like this:

    import { useEffect, useState } from 'react';

    const MyPage = () => {
      const [data, setData] = useState(null);
      const [loading, setLoading] = useState(true);
      const [error, setError] = useState(null);

      // Fetch once on mount and track loading and error state by hand.
      useEffect(() => {
        setLoading(true);
        fetch('/api/my-data')
          .then((res) => res.json())
          .then((data) => {
            setData(data);
            setLoading(false);
          })
          .catch((err) => {
            setError(err);
            setLoading(false);
          });
      }, []);

      if (loading) return <div>Loading...</div>;
      if (error) return <div>Error: {error.message}</div>;
      return <div>{data}</div>;
    };

to this:

    const useMyData = () => {
      /* ... */
      return { data, loading, error };
    };

    const MyPage = () => {
      const { data, loading, error } = useMyData();

      if (loading) return <div>Loading...</div>;
      if (error) return <div>Error: {error.message}</div>;
      return <div>{data}</div>;
    };
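The body of useMyData is elided above. As one possible way to organize it (an assumption, not the original implementation), the data, loading, and error values can be modeled as a single state object with a pure reducer, which a hook could then drive with React's `useReducer`. The names `FetchState`, `FetchAction`, `initialFetchState`, and `fetchReducer` are hypothetical.

```typescript
// Hypothetical state shape mirroring the { data, loading, error } triple.
interface FetchState<T> {
  data: T | null;
  loading: boolean;
  error: Error | null;
}

// The three events a fetch can produce.
type FetchAction<T> =
  | { type: "start" }
  | { type: "success"; data: T }
  | { type: "failure"; error: Error };

// Matches the original component, which starts out in the loading state.
function initialFetchState<T>(): FetchState<T> {
  return { data: null, loading: true, error: null };
}

// Pure transition function: no React imports needed, so it is easy to test.
function fetchReducer<T>(
  state: FetchState<T>,
  action: FetchAction<T>
): FetchState<T> {
  switch (action.type) {
    case "start":
      return { ...state, loading: true, error: null };
    case "success":
      return { data: action.data, loading: false, error: null };
    case "failure":
      // Keep any previously loaded data so the UI can still show it.
      return { ...state, loading: false, error: action.error };
  }
}
```

Inside the hook, an effect would dispatch "start" before calling fetch, then "success" or "failure" when the promise settles, and the hook would return the current state to the page component.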

Serverless MongoDB

Any stateful application eventually needs a database. MongoDB provides an amazing developer experience; however, from what I’ve seen, running it is generally pricier than other databases. A great workaround that I’ve been using is MongoDB’s Serverless offering in Atlas.

This has saved me a ton of money when running my database in production, since it only needs to be active while it’s being accessed, and I’m billed for that usage rather than for an always-on cluster.

Application Logging

One of the most crucial aspects of any application is logging. Luckily, Axiom provides a great out-of-the-box developer experience with a generous free tier. You simply wrap your Next config in a withAxiom call and export app vitals from your pages/_app.tsx file. Then, any component can simply import { log } from '@axiomhq/client' and log as it would with its regular logger.